CS 2223 Nov 10 2015

Expected reading: 308-314

Daily Exercise:

Another general shout!

I do believe that these applauses

are for some new honours

that are heap’d on Caesar.

Julius Caesar

William Shakespeare

1 Priority Queues

1.1 Sorting Summary

We could have spent several weeks on sorting algorithms, but we still have miles to go before we sleep, so let’s quickly summarize. We covered the following:

Insertion Sort

Selection Sort

Merge Sort

Quick Sort

For each of these, you need to be able to explain the fundamental structure of the algorithm. Some work by dividing problems into subproblems that aim to be half the size of the original problem. Some work by greedily solving a specific subtask that reduces the size of the problem by one.

For each algorithm we worked out specific strategies for counting key operations (such as exchanges and comparisons) and developed formulas to count these operations in the worst case.

We showed that comparison-based sorting has a lower bound of ~N log N comparisons in the worst case, which means that no comparison-based sorting algorithm can beat this limit, though different implementations can still differentiate their behavior through programming optimizations and shortcuts.

1.2 Yesterday’s Daily Exercise

How did people fare on evaluating the recursive solution in terms of the maximum number of comparisons?

You should have been able to declare C(N) as the number of comparisons and then define its behavior as:

C(N) = C(N/2) + C(N/2) + 1

assuming that N = 2^n is a power of 2.

C(N) = 2*C(N/2) + 1

C(N) = 2*(2*C(N/4) + 1) + 1

C(N) = 2*(2*(2*C(N/8) + 1) + 1) + 1

and this leads to...

C(N) = 8*C(N/8) + 4 + 2 + 1

since N = 2^n and we are still at k=3...

C(2^n) = 2^k*C(N/2^k) + (2^k - 1)

Now we can continue until k = n = log N, which would lead to...

C(2^n) = 2^n*C(N/2^n) + (2^n - 1)

and since N/2^n = 1 and C(1) = 0, we have

C(N) = N - 1
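
As a sanity check, the recurrence C(N) = 2*C(N/2) + 1 with C(1) = 0 can be evaluated directly and compared against N - 1. This is only a sketch: the class and method names are hypothetical (they are not from the course repository), and it assumes N is a power of 2.

```java
// Hypothetical helper: evaluates the recurrence C(N) = 2*C(N/2) + 1
// with C(1) = 0, assuming N is a power of 2.
class RecurrenceCheck {
    static long comparisons(long n) {
        if (n == 1) {
            return 0;                      // base case: C(1) = 0
        }
        return 2 * comparisons(n / 2) + 1; // two half-size problems plus one comparison
    }

    public static void main(String[] args) {
        // Print N, C(N), and N - 1 side by side for N = 1, 2, 4, ..., 1024.
        for (long n = 1; n <= 1024; n *= 2) {
            System.out.println(n + "\t" + comparisons(n) + "\t" + (n - 1));
        }
    }
}
```

For every power of 2 tried, the second and third columns should agree.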

1.3 Homework1

Some comments on HW1. First, when it comes to whether to use floor or ceiling, it matters and you have to pay attention to the details:

| N | 1 + floor(N/2) | 1 + ceiling(N/2) |
|---|---|---|
| 1 | 1 | 2 |
| 2 | 2 | 2 |
| 3 | 2 | 3 |
| 4 | 3 | 3 |
| 5 | 3 | 4 |
| 6 | 4 | 4 |
| 7 | 4 | 5 |
| 8 | 5 | 5 |
| 9 | 5 | 6 |
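
In Java the difference between the two columns comes down to how the integer division is written. The following sketch (hypothetical class and method names, valid for non-negative n only) reproduces the table:

```java
// Hypothetical illustration: floor and ceiling of n/2 with int arithmetic.
class FloorCeiling {
    static int onePlusFloorHalf(int n) {
        return 1 + n / 2;        // for n >= 0, int division truncates, which is floor
    }

    static int onePlusCeilingHalf(int n) {
        return 1 + (n + 1) / 2;  // adding 1 before dividing rounds up for n >= 0
    }

    public static void main(String[] args) {
        for (int n = 1; n <= 9; n++) {
            System.out.println(n + " | " + onePlusFloorHalf(n) + " | " + onePlusCeilingHalf(n));
        }
    }
}
```

The two values differ exactly when n is odd, which is where an off-by-one error tends to creep in.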

You can retrieve feedback from the following link:

http://www.wpi.edu/~heineman/cs2223.

There appear to be some homework submissions still waiting to be graded; not sure how that happened, but I will look into this. Currently the average for HW1 is 87 with a standard deviation of 11. This demonstrates that the majority of the students were able to address the material as presented on the HW.

1.4 Homework2

I completed my solution for HW2; in doing so, I found some interesting "Java things" that you will have to face and I need to explain them so you won’t be surprised when you complete your assignment.

Clarifying that each Item must be Comparable

A number of issues related to Java generics

A missing method. You need to implement remove as well. This is a case of an API that was not complete (p. 96).

Important: I removed the performance constraint on the constructor because it was a lie.

And finally, when it comes to the performance as required, you will discover that you need to maintain your linked lists in sorted order to be able to achieve these requirements.

I also provide a JUnit test case that will be used to grade the validity of your implementation.

1.5 Priority Queue Type

In the presentation on the Queue type, there was some discussion about a type of queue in which you could enqueue elements but then dequeue the element of "highest priority." This is the classic definition of a Priority Queue. To describe this as an API, consider the following operations that would be supported (p. 309):

| Operation | Description |
|---|---|
| MaxPQ(n) | create priority queue with initial size |
| insert | insert key into PQ |
| delMax | return and remove largest key from PQ |
| size | return # elements in PQ |
| isEmpty | is the priority queue empty |

There are other elements, but these are the starting point.

In our initial description, the Key values being inserted into the PQ are themselves primitive values. In the typical scenario, the elements are real-world entities which have an associated priority attribute.

One solution is to maintain an array of elements sorted in reverse order by their priority. With each request to insert a key, place it into its proper sorted location in the array.

Now, you can use binary array search to find where the key should be inserted, but then you might have to move up to N elements to make room for the new item.
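
A minimal sketch of the sorted-array idea, using int keys for brevity. The class name and details are hypothetical and are not the course's MaxPQ. It also assumes ascending order, so the maximum sits at the end of the array and can be removed in constant time; either direction works as long as the maximum is at the end that is cheap to remove.

```java
import java.util.Arrays;
import java.util.NoSuchElementException;

// Hypothetical sorted-array priority queue of ints (illustrative names only).
// Keys are kept in ascending order, so the maximum is always at index n-1.
class SortedArrayMaxPQ {
    private int[] keys;
    private int n;

    SortedArrayMaxPQ(int capacity) {
        keys = new int[capacity];
    }

    // Binary search locates the slot in about log N comparisons, but shifting
    // the larger keys one place to the right can still move up to N elements.
    void insert(int key) {
        if (n == keys.length) {
            keys = Arrays.copyOf(keys, Math.max(1, 2 * n)); // grow when full
        }
        int lo = 0, hi = n;
        while (lo < hi) {                  // find first index holding a key > key
            int mid = lo + (hi - lo) / 2;
            if (keys[mid] <= key) lo = mid + 1;
            else hi = mid;
        }
        System.arraycopy(keys, lo, keys, lo + 1, n - lo); // shift right by one
        keys[lo] = key;
        n++;
    }

    // The largest key is last, so removing it takes constant time.
    int delMax() {
        if (n == 0) throw new NoSuchElementException("priority queue is empty");
        return keys[--n];
    }

    int size() { return n; }

    boolean isEmpty() { return n == 0; }
}
```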

But doesn’t this seem like a lot of extra work to maintain a fully sorted array when you only need to retrieve the maximum value?

You could keep all elements in unsorted fashion, and then your delMax operation will take time proportional to the number of elements in the PQ.
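
The unsorted alternative can be sketched the same way. Again the class name is hypothetical and int keys are used only to keep the example short.

```java
import java.util.Arrays;
import java.util.NoSuchElementException;

// Hypothetical unsorted-array priority queue of ints (illustrative names only).
class UnsortedArrayMaxPQ {
    private int[] keys;
    private int n;

    UnsortedArrayMaxPQ(int capacity) {
        keys = new int[capacity];
    }

    // Appending at the end takes constant time (amortized, because of resizing).
    void insert(int key) {
        if (n == keys.length) {
            keys = Arrays.copyOf(keys, Math.max(1, 2 * n)); // grow when full
        }
        keys[n++] = key;
    }

    // Every key must be inspected to find the maximum: time proportional to N.
    int delMax() {
        if (n == 0) throw new NoSuchElementException("priority queue is empty");
        int max = 0;
        for (int i = 1; i < n; i++) {
            if (keys[i] > keys[max]) max = i;
        }
        int result = keys[max];
        keys[max] = keys[n - 1]; // fill the hole with the last key
        n--;
        return result;
    }

    int size() { return n; }

    boolean isEmpty() { return n == 0; }
}
```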

No matter how you look at it, some of these operations take linear time, or time proportional to the number of elements in the array. Page 312 summarizes the situation nicely:

| Data Structure | insert | remove max |
|---|---|---|
| sorted array | N | 1 |
| unsorted array | 1 | N |
| impossible | 1 | 1 |
| heap | log N | log N |

The alternate heap structure can perform both operations in log N time. This is a major improvement and worth investigating how it is done.

1.6 Heap Data Structure

We have already seen how the "brackets" for tournaments are a useful metaphor for finding the winner (i.e., the largest value) in a collection. They also provide inspiration for locating the second largest item more efficiently than by searching through the remaining N-1 items for the next largest one.

The key is finding ways to store a partial ordering among the elements in a binary decision tree. We have seen this structure already when proving the optimality of comparison-based sorting.

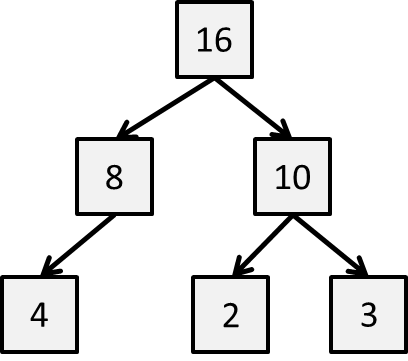

Consider having the following values {2, 3, 4, 8, 10, 16} and you want to store them in a decision tree so you can immediately find the largest element.

You can see that each box with children is larger than either of them. While not fully ordered, this at least offers a partial order. The topmost box is the largest value, and the second largest value is one of its two children. This looks promising on paper, but how can we store this information efficiently?

1.7 Benefits of Heap

We have already seen how the concept of "brackets" revealed an efficient way to determine the two largest elements of a collection in n + ceiling(log(n)) - 2 comparisons, which is a great improvement over the naive approach that needs 2n-3. What we are going to do is show how the partial ordering of elements into a heap yields interesting performance benefits that can be used for both priority queues (today’s lecture) and sorting (Thursday’s lecture).
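
To get a feel for the gap between the two comparison counts, the formulas can be evaluated for a few sizes. The sketch below uses hypothetical names and assumes the logarithm is base 2.

```java
// Hypothetical illustration of the two comparison counts for finding the
// two largest elements among n values; log is assumed to be base 2.
class TopTwoCounts {
    // ceiling(log2(n)) for n >= 1, computed with integer bit operations.
    static int ceilLog2(int n) {
        return 32 - Integer.numberOfLeadingZeros(n - 1);
    }

    static int tournament(int n) {
        return n + ceilLog2(n) - 2;
    }

    static int naive(int n) {
        return 2 * n - 3;
    }

    public static void main(String[] args) {
        for (int n = 2; n <= 1024; n *= 2) {
            System.out.println(n + "\t" + tournament(n) + "\t" + naive(n));
        }
    }
}
```

For n = 16 this gives 18 comparisons for the bracket approach against 29 for the naive one.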

Definition: A binary tree is heap-ordered if the key in each node is larger than or equal to the keys in that node’s two children (if they exist).

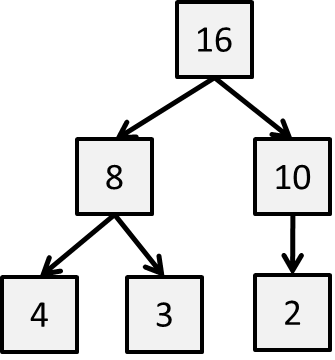

But now we add one more property often called the heap shape property.

Definition: A binary tree has heap-shape if each level is filled "in order" from left to right and no value appears on a level until the previous level is full.

While the above example satisfies the heap-ordered property, it violates the heap-shape property because the final level has a gap where a key could have been placed.

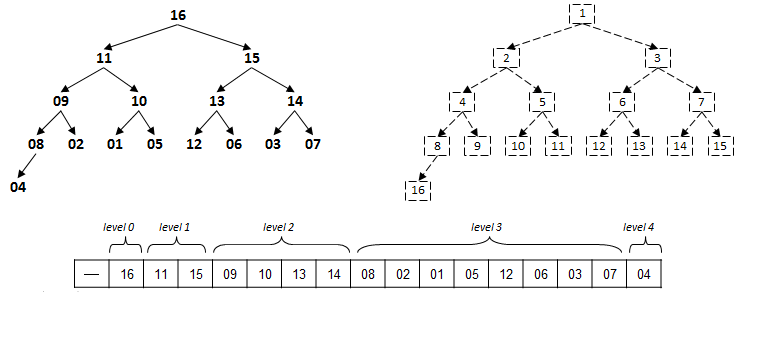

With this model in mind, there is a direct mapping of the values of a heap into an array. This can be visualized as follows:

With the root stored at index 1, each value at index k has potentially two children at indices 2*k and 2*k+1. Alternatively, each value at index k > 1 has its parent node at index floor(k/2).
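
The index arithmetic can be written down directly. In this sketch the heap occupies a[1..n] and a[0] is left unused, so that the 2*k and 2*k+1 formulas apply unchanged. The class name, the helper names, and the sample arrangement of the six values are hypothetical, not necessarily the one drawn in the figure.

```java
// Hypothetical helpers for a heap stored in a[1..n] (a[0] is unused).
class HeapIndexing {
    static int parent(int k) { return k / 2; }    // floor(k/2) for k > 1
    static int left(int k)   { return 2 * k; }
    static int right(int k)  { return 2 * k + 1; }

    // Heap-ordered means no key is larger than the key in its parent.
    static boolean isHeapOrdered(int[] a, int n) {
        for (int k = 2; k <= n; k++) {
            if (a[parent(k)] < a[k]) return false;
        }
        return true;
    }

    public static void main(String[] args) {
        int[] a = {0, 16, 10, 8, 3, 4, 2};        // one possible heap of the six values
        System.out.println(isHeapOrdered(a, 6));
    }
}
```

Because the array has no gaps between index 1 and index n, the heap-shape property holds automatically; only heap order needs to be checked.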

1.8 Heap Problem for HW2

You are to get some experience with the MaxPQ type, which you can find in the Git repository under today’s lecture.

There are two internal operations needed to maintain the structure of a heap. These can be called because the client has asked to:

Insert an element into the PQ

Delete the maximum element from the PQ

Reprioritize an existing element in the PQ

For now we focus on the mechanisms and you will see their ultimate use on the homework assignment and the lecture on Nov 12 2015.

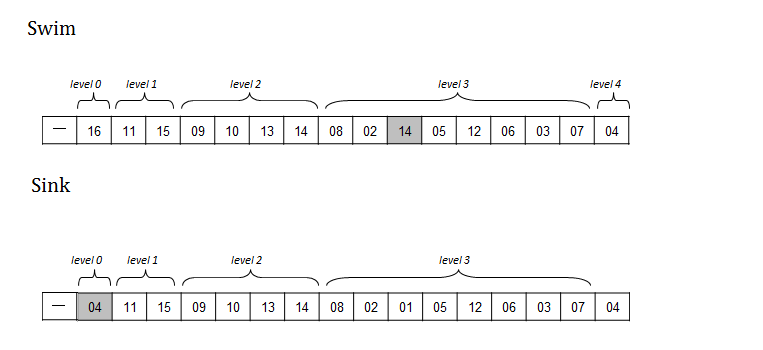

1.9 Swim – reheapify up

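What if you have a heap and one of its values becomes larger than its parent? What do you do? No need to reorganize the ENTIRE array; you only need to worry about the ancestors: exchange the value with its parent, and repeat until it is no longer larger than its parent or it reaches the root. And since the heap structure is compactly represented, you know that (p. 314) the height of a binary heap is floor(log N), so at most that many exchanges are needed.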
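
A minimal sketch of swim on an int array, assuming the heap occupies a[1..n] with a[0] unused. The class name and the sample data are hypothetical; the MaxPQ in the repository may be organized differently.

```java
// Hypothetical sketch of swim (reheapify up) for a heap stored in a[1..n].
class SwimDemo {
    // Restores heap order after a[k] has become larger than its parent.
    static void swim(int[] a, int k) {
        while (k > 1 && a[k / 2] < a[k]) {  // parent is smaller: exchange
            int tmp = a[k / 2];
            a[k / 2] = a[k];
            a[k] = tmp;
            k = k / 2;                      // continue from the parent's position
        }
    }

    public static void main(String[] args) {
        int[] a = {0, 16, 10, 8, 3, 4, 2};
        a[6] = 20;                          // the last key suddenly becomes the largest
        swim(a, 6);
        System.out.println(java.util.Arrays.toString(a));
    }
}
```

Only the keys on the path from position k up to the root are touched; the rest of the array stays where it is.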

1.10 Sink – reheapify down

What if you have a heap and one of its values becomes smaller than either of its (potentially) two children? No need to reorganize the ENTIRE array, you only have to swap this value with the larger of its two children (if they exist). Note this might further trigger a sink, but no more than log N of them.
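
A matching sketch of sink, under the same assumptions (heap in a[1..n], a[0] unused, hypothetical class name and sample data):

```java
// Hypothetical sketch of sink (reheapify down) for a heap stored in a[1..n].
class SinkDemo {
    // Restores heap order after a[k] has become smaller than one of its children.
    static void sink(int[] a, int k, int n) {
        while (2 * k <= n) {                    // while a[k] has at least one child
            int j = 2 * k;                      // left child
            if (j < n && a[j] < a[j + 1]) j++;  // pick the larger of the two children
            if (a[k] >= a[j]) break;            // heap order already holds here
            int tmp = a[k];
            a[k] = a[j];
            a[j] = tmp;
            k = j;                              // continue from the child's position
        }
    }

    public static void main(String[] args) {
        int[] a = {0, 16, 10, 8, 3, 4, 2};
        a[1] = 1;                               // the root suddenly becomes the smallest
        sink(a, 1, 6);
        System.out.println(java.util.Arrays.toString(a));
    }
}
```

Exchanging with the larger child matters: the key that moves up becomes the parent of the other child, so it must be at least as large as that child.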

1.11 Version : 2015/11/11

(c) 2015, George Heineman